Google Rolls Out Gemini, the Most Powerful AI Model

- The Guardian

Jakarta – Google has officially rolled out Gemini, its most powerful artificial intelligence (AI) model to date. The Google-made AI is said to be able to rival ChatGPT.

Gemini is Google's latest large language model, first announced by Sundar Pichai at the I/O developer conference in May and now launched publicly.

According to Pichai and Google DeepMind CEO Demis Hassabis, this is a huge leap in AI models that will eventually affect almost all Google products.

"One of the powerful things about today is that you can work on one basic technology and make it better, and it flows directly into all our products," Sundar Pichai said.

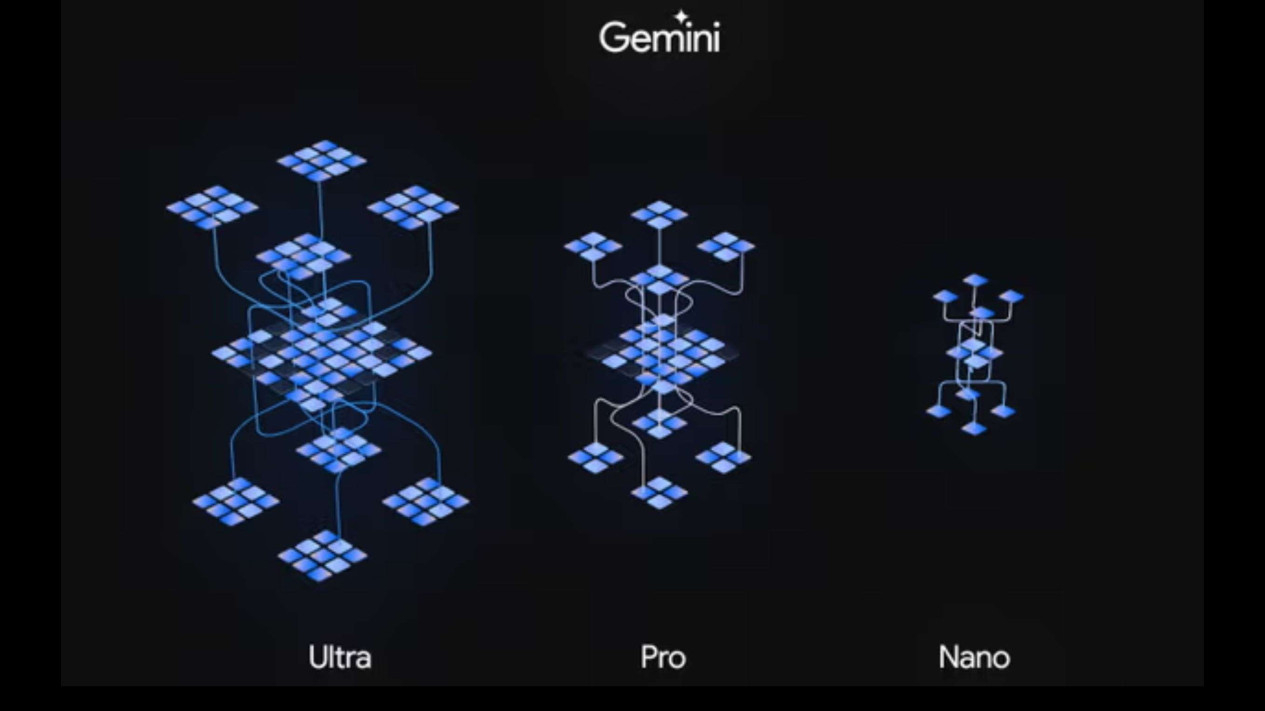

Gemini is not just one AI model. There is a lighter version called Gemini Nano which is intended to run natively and offline on Android devices.

There's a more powerful version called Gemini Pro that will soon power many of Google's AI services and be the backbone of Bard.

Then there's an even more capable model called Gemini Ultra, the most powerful large language model (LLM) Google has ever made, which seems to be designed primarily for data centers and enterprise applications.

Google is launching these models in several ways for now: Bard is now powered by Gemini Pro, and Pixel 8 Pro users will get some new features thanks to Gemini Nano. Gemini Ultra is coming next year.

Developers and enterprise customers will be able to access Gemini Pro through Google Generative AI Studio or Vertex AI on Google Cloud starting December 13, 2023.

Gemini is only available in English for now, with other languages to follow soon. But Pichai said that it will eventually be integrated into Google's search engine, its advertising products, the Chrome browser, and more, worldwide.

OpenAI launched ChatGPT a year ago, and the company and its products quickly became the biggest thing in AI.

Now Google, which created much of the underlying technology behind the current AI boom and has called itself an "AI-first" organization for nearly a decade, yet was surprised by how good ChatGPT was and how quickly OpenAI's technology took over the industry, is finally ready to fight back.

"We've done a very deep analysis of these two systems together, and done benchmark testing," Hassabis said.

Google ran 32 established benchmarks to compare the two models, Gemini and OpenAI's GPT-4, ranging from broad tests such as Massive Multitask Language Understanding (MMLU) to tests that compare the two models' ability to generate Python code.

"I think we're far ahead in 30 of those 32 benchmarks," Hassabis said.

"Some of them are very narrow. Some of them are bigger," he added.

In those benchmarks (most of which are very close), Gemini's biggest advantage comes from its ability to understand and interact with video and audio.

This is very deliberate: multimodality has been part of the Gemini plan from the start. Google didn't train separate models for images and sound, as OpenAI did with DALL-E and Whisper. Instead, it built one multisensory model from scratch.

"We've always been interested in very general systems," Hassabis said.

He is particularly interested in how to combine all those modes, collecting as much data as possible from any number of inputs and senses, and then giving a response with the same variety.

Currently, the basic Gemini models take text as input and produce text as output, but more advanced models like Gemini Ultra can work with images, video and audio.